...

Select images on your computer

...

You can train your algorithms on 2D or multichannel images.

Before you choose a training type, check which image types and formats it supports:

| Semantic Segmentation | Instance Segmentation | |
|---|---|---|
| 2D | 2D | Multichannel |
| standard and WSI; RGB and grayscale; 8-bit | standard and WSI; RGB and grayscale; 8-bit and 16-bit | standard and WSI; RGB; 8-bit and 16-bit; max. 10 channels |

| Note |

|---|

Important: Make sure your image file formats are supported by IKOSA Portal (see File formats). |

| Note |

|---|

Important: Non-WSI formats of 2D and multichannel images are supported up to a maximum image size of 625 megapixels (e.g. 25,000 x 25,000 pixels). Training on multichannel images will be available soon. |
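To check in advance whether a non-WSI image fits the size limit stated in the note, the arithmetic can be sketched as follows. This is a minimal illustration, not part of IKOSA; the function names are hypothetical, and the image dimensions are assumed to be known already (for example from your image viewer).

```python
MAX_MEGAPIXELS = 625  # limit for non-WSI images, as stated in the note


def megapixels(width_px: int, height_px: int) -> float:
    """Return the image size in megapixels (1 megapixel = 1,000,000 pixels)."""
    return width_px * height_px / 1_000_000


def within_size_limit(width_px: int, height_px: int) -> bool:
    """Check whether a non-WSI image stays within the 625-megapixel limit."""
    return megapixels(width_px, height_px) <= MAX_MEGAPIXELS


print(megapixels(25_000, 25_000))         # 625.0
print(within_size_limit(25_000, 25_000))  # True
print(within_size_limit(30_000, 25_000))  # False (750 megapixels)
```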

Log in to IKOSA

Before you start training your own algorithms, log in to IKOSA at https://app.ikosa.ai/auth/login and navigate to the IKOSA AI page.

...

Upload images

Read our article on How-to upload images?

Annotate images

Read our article on How-to annotate an image? and How-to draw a ROI?

...

Before starting a new training, you can learn all about IKOSA AI in this article. The link in the IKOSA AI Wizard leads you to this page.

...

Currently, IKOSA AI covers semantic and instance segmentation tasks only. However, image classification and object detection will also be supported in the near future.

...

4. Select labels

Select only the labels relevant to the training.

...

5. Select dataset split

In step 5, first select your dataset split (random or manual). You then select your images in step 6.

When you use a random dataset split, you select all your images at once (at least two of them must contain annotations), and the split is applied automatically.

Random dataset split: selecting your images for training and validation.

When you use a manual split, you select the images for training and the images for validation separately.

Manual dataset split: selecting your images for training and validation separately.

...

| Info |

|---|

Please note:

|

| Note |

|---|

Important: if you have annotated your images mostly within ROI(s), then you should consider the option of training your algorithm on ROI(s) instead of the whole image. If an image does not contain any ROI(s) but you still select this function, the whole image will be used instead. |
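The fallback described in the note can be illustrated with a short sketch. It assumes a hypothetical `Image` record that simply lists the names of its ROIs; it is not an IKOSA data structure or API and only mirrors the stated behavior.

```python
from dataclasses import dataclass, field


@dataclass
class Image:
    # Hypothetical stand-in for an uploaded image; not an IKOSA data structure.
    name: str
    rois: list[str] = field(default_factory=list)


def training_regions(image: Image, train_on_rois: bool) -> list[str]:
    """Return the regions that would be used for training on this image."""
    if train_on_rois and image.rois:
        return image.rois
    # No ROI in the image (or the option is not selected): use the whole image.
    return ["whole image"]


print(training_regions(Image("slide_a", ["ROI 1", "ROI 2"]), train_on_rois=True))
print(training_regions(Image("slide_b"), train_on_rois=True))
```

The second call shows the fallback: the image has no ROI, so the whole image is used even though training on ROI(s) is selected.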

6.

...

Select images

Select image type

Select between 2D and multichannel images.

...

Selecting images in random dataset split

...

80% of your selected images will be automatically assigned to the training set, while 20% of the images will be assigned to the validation set, on which the performance of your trained algorithm will be evaluated.
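The 80/20 assignment can be sketched as follows. This is only an illustration of a random split, not IKOSA's actual implementation; in particular, the rounding rule and the guarantee of at least one image per set are assumptions, and the function and file names are hypothetical.

```python
import random


def random_split(images, train_fraction=0.8, seed=None):
    """Shuffle the images and split them into a training and a validation set."""
    if len(images) < 2:
        raise ValueError("Select at least two images.")
    shuffled = list(images)
    random.Random(seed).shuffle(shuffled)
    # Assumption: round to the nearest image count, keeping both sets non-empty.
    n_train = round(len(shuffled) * train_fraction)
    n_train = max(1, min(n_train, len(shuffled) - 1))
    return shuffled[:n_train], shuffled[n_train:]


images = [f"image_{i:02d}.tif" for i in range(10)]
train, val = random_split(images, seed=0)
print(len(train), len(val))  # 8 2
```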

...

6. Option b: Manual dataset split and selecting your images

...

Selecting images in manual dataset split

Select at least 1 training and 1 validation image. Both of them must contain annotations.

| Note |

|---|

Important: If images are selected manually, the validation set has to:

If you are not sure how to select images manually, we recommend using the random split, since manual selection might introduce unwanted bias. |
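The minimum requirement for a manual split (at least one annotated training image and one annotated validation image) can be checked with a sketch like the one below. The function name and the `annotated` set are hypothetical, and the sketch does not cover any further requirements for the validation set.

```python
def check_manual_split(train_images, val_images, annotated):
    """Return a list of problems; an empty list means the minimum rule is met."""
    problems = []
    if not any(name in annotated for name in train_images):
        problems.append("training set needs at least one annotated image")
    if not any(name in annotated for name in val_images):
        problems.append("validation set needs at least one annotated image")
    return problems


annotated = {"img_01.tif", "img_02.tif"}  # hypothetical images with annotations
print(check_manual_split(["img_01.tif"], ["img_02.tif"], annotated))  # []
print(check_manual_split(["img_01.tif"], ["img_03.tif"], annotated))
```

The second call reports a problem because the only validation image has no annotations.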

...

| Info |

|---|

Background recognition

As stated above, images (or ROI(s)) without annotations of the selected labels can also be included in the training. This provides the algorithm training with a 'baseline' for a more reliable distinction between the background and the features that need to be labeled.

The algorithm autonomously learns how the background differs from the foreground. Even if you do not provide separate background images, the algorithm obtains information on what the background looks like from the spaces in between annotations.

Uploading images that show background only helps the algorithm learn what the background looks like, even when no labeled objects are present in the foreground. This also helps the algorithm learn what patterns form in the background around a foreground object.

Be careful about which unannotated images you include in the training as background-only examples. If foreground objects are present in those images, the algorithm will later not be able to make a proper distinction between background and foreground areas. |

| Note |

|---|

Important: Make sure these images contain only background, as unlabeled features introduce contradictory information into the trained algorithm. |

...

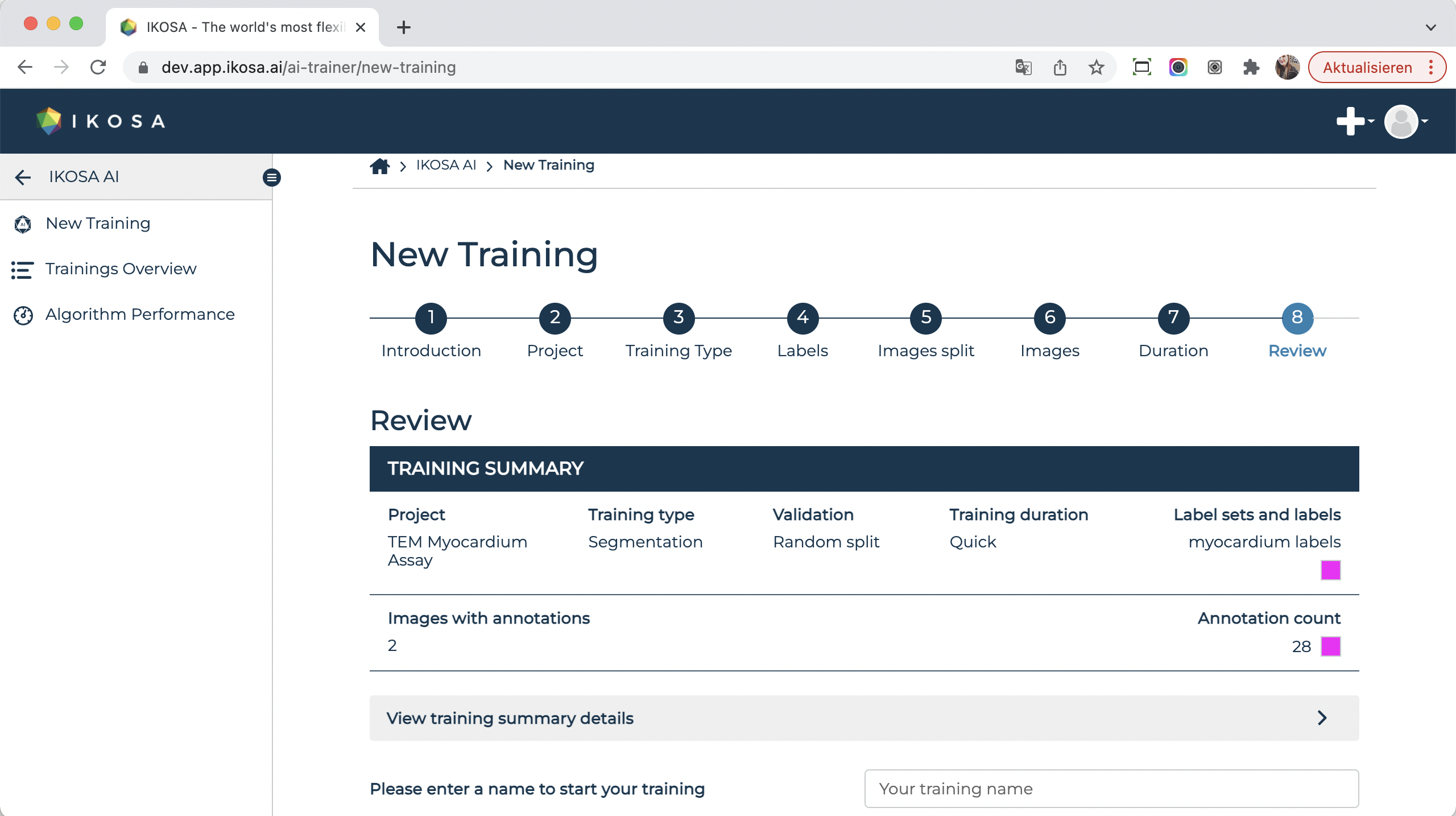

Review your selections before starting the training of your algorithm.

Give your training a name and start training your algorithm!

...

it!

...

Wait until the training is completed

You can track the progress of your active trainings. If multiple trainings have been started by you and/or other users (which may not be visible to you), trainings will be

| Info |

|---|

Please note: Your training might be queued. In that case, an estimated time until the completion of the training is displayed. |

...

📑 Overview of the results

...

The downloadable zip folder contains:

the report as a PDF file with quantitative outcomes

...

qualitative results

...

as validation visualizations

...

...

If you are satisfied with the performance of the algorithm and have thoroughly checked the report, you can deploy your model for further use: How-to deploy and use a trained algorithm?

...